Eyeline Studios, powered by Netflix, announced DifFRelight, a diffusion-based framework capable of relighting complex facial performances. Their research features a method of translating flat-lit facial captures into images and dynamic sequences with precise lighting control that reproduces even complex effects like eye reflections or self-shadowing. More on DifFRelight by Netflix Eyeline Studios below.

You have probably heard about AI relighting features already, even if you don’t work with VFX or color grading regularly. For instance, DaVinci Resolve has included a tool for artificially relighting scenes in post-production since last year. The new DifFRelight by Netflix Eyeline Studios is a different kind of framework, aimed more at experts in visual effects. However, the results may be interesting even for those of us who are not especially technically savvy.

DifFRelight by Netflix Eyeline Studios: essentials

DifFRelight is not a ready-made tool or a button that users can click to change the lighting of a scene (at least, for now). It is a framework presented by Eyeline Studios here. The developers demonstrate a method that takes flat-lit input and turns it into a scene with complex lighting. Their examples show different facial performances relit realistically under novel lighting, with detailed features such as skin texture and hair preserved.

About the method

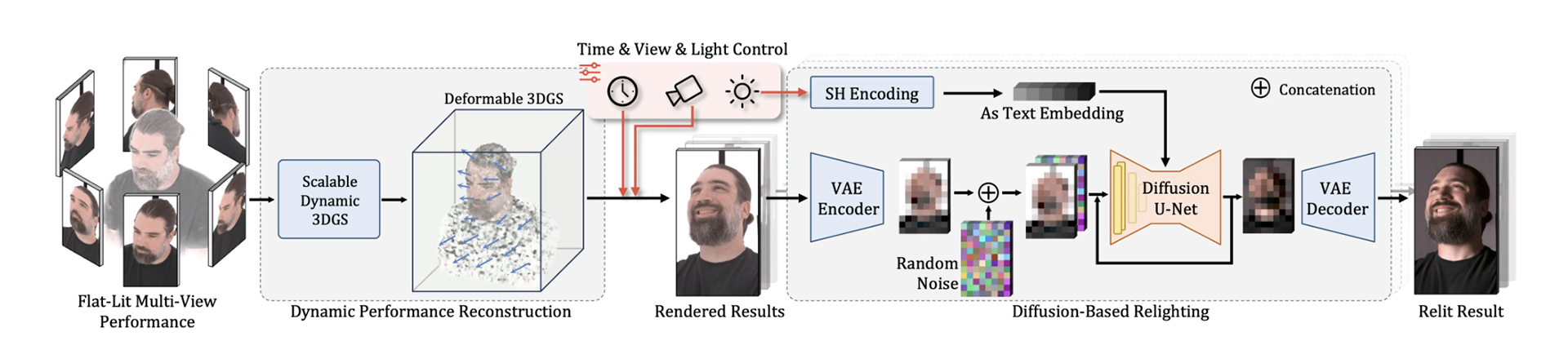

In the proposed workflow, the researchers render the performance from a GS reconstruction (“GS” stands for “Gaussian Splatting”). The resulting flat-lit video serves as the input.

Starting with multi-view performance data of a subject in a neutral environment, we train a deformable 3DGS to create novel-view renderings of the dynamic sequence. These serve as inputs for a diffusion-based relighting model, trained on paired data to translate flat-lit input images to relit results based on specified lighting.

A quote from the framework explanation

Then, the flat-lit input goes through a pre-trained diffusion-based image-to-image translation model. According to Eyeline Studios, this model makes it possible to construct new lighting conditions, with adjustable light size and direction. Overall, the presented approach enables relighting from free viewpoints and of unseen facial expressions.

If you want to understand this process or how the model is trained in more technical detail, you can access the research paper here.

What is 3D Gaussian Splatting?

As noted by commenters, DifFRelight marks Netflix’s first 3DGS publication. The technique, 3D Gaussian Splatting, has been around for a while. Some AI companies, such as Luma AI, have adopted it instead of NeRF technology to achieve faster and more realistic 3D scans of real-life environments. So how does it work?
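For readers who like to see an idea in code, here is a minimal Python sketch of the blending step at the heart of splatting. It is a heavily simplified illustration, not the implementation used by Eyeline Studios or Luma AI. It assumes the Gaussians have already been projected onto the image plane, treats them as round (isotropic) blobs with a single fixed color, and blends them from nearest to farthest for one pixel. The names `gaussian_weight` and `render_pixel` and the dictionary fields are made up for this example.

```python
import math


def gaussian_weight(px, py, cx, cy, sigma):
    """Falloff of a round 2D Gaussian centred at (cx, cy), evaluated at pixel (px, py)."""
    d2 = (px - cx) ** 2 + (py - cy) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))


def render_pixel(px, py, splats):
    """Blend splats front to back for one pixel.

    Each splat is a dict with hypothetical fields:
    depth, x, y, sigma, opacity, rgb (a 3-tuple in the 0..1 range).
    Returns the blended color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light that still passes through
    for s in sorted(splats, key=lambda s: s["depth"]):  # nearest first
        alpha = s["opacity"] * gaussian_weight(px, py, s["x"], s["y"], s["sigma"])
        for i in range(3):
            color[i] += transmittance * alpha * s["rgb"][i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque, stop early
            break
    return color, transmittance


# Two half-transparent splats stacked on the same spot: red in front, green behind.
splats = [
    {"depth": 2.0, "x": 0.0, "y": 0.0, "sigma": 1.0, "opacity": 0.5, "rgb": (0.0, 1.0, 0.0)},
    {"depth": 1.0, "x": 0.0, "y": 0.0, "sigma": 1.0, "opacity": 0.5, "rgb": (1.0, 0.0, 0.0)},
]
pixel_color, remaining = render_pixel(0.0, 0.0, splats)
```

In this toy case the red splat in front contributes more to the pixel than the green one behind it, because the green splat is only seen through whatever the red one lets pass. A real 3DGS renderer does the same kind of blending with millions of stretched, rotated Gaussians and view-dependent color.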

I prefer to trust specialists from the VFX field to explain this topic, so here is a short 3-minute video from a YouTube channel that I follow and whose creators work a lot with 3D Gaussian Splatting:

What do you think about DifFRelight by Netflix Eyeline Studios? And if you work in the VFX field, how will such a framework affect your actual workflow? What potential does it hold for the future, in your opinion? Share your thoughts with us in the comments below! (Knowing that topics around AI always ignite heated discussions, we kindly ask you to stay polite to each other and to us.)

Feature image source: Netflix Eyeline Studios