The rapid development of generative AI will either excite you or make you a bit uneasy. Either way, there is no point in ignoring it because humanity has already reached the point of no return. The technical advancements are here and will undoubtedly affect our industry, to say the least. As filmmakers and writers, we take it upon ourselves to inform you responsibly about the actual state of the technology and how to approach it as ethically and sustainably as possible. With that in mind, we’ve put together an overview of AI video generators to highlight their current capabilities and limitations.

If you’ve been following this topic on our site for a while, you might remember our first piece about Google’s baby steps toward generating moving images from text descriptions. Around a year ago, the company published promising research papers and examples from its first tests. However, Google’s models were not yet available to the general public. Fast forward to now, and not only has this idea become a reality, but we have a plethora of working AI video generators to choose from.

Well, “working” is probably too strong a word. Let’s give them a try, and talk about how and when it is okay to use them.

AI video generators: market leaders

The first company to roll out an AI model capable of generating and digitally stylizing videos based on text commands was Runway. Since spring 2023, they have launched tool after tool for enhancing clips (AI upscaling, artificial slow motion, one-click background removal, etc.), which has made a lot of VFX processes simpler for independent creators. However, we will review only their flagship product: Gen-2, a deep-learning network that can conjure videos upon your request (or at least it tries to).

While Runway indeed still runs the show in video generation, they now have a couple of established competitors. The most well-known one is Pika.

Pika is an idea-to-video platform that utilizes AI. There’s a lot of technical stuff involved, but basically, if you can type it, Pika can turn it into a video.

A description from their website

As the creators of Pika emphasize, their tech team developed and trained their own video model from scratch, and you won’t find it elsewhere on the market. However, they don’t disclose what kind of data it was trained on (and we will get to this question below). Until recently, Pika worked only through the Discord server as a beta test and was completely free of charge. You can still try it out this way (just click on the Discord link above), or head over to their freshly launched upgraded model Pika 1.0 in the web interface.

Both of these companies offer a free basic plan for their products. Runway allows only a limited number of generations to test their platform. In the case of Pika, you get 30 credits (enough for 3 short videos), which refill every day. The generated clips have a baseline length (4 seconds for Runway’s Gen-2, 3 seconds for Pika’s AI) that can be extended a few times. The default resolution ranges from 768 × 448 (Gen-2) to 1280 × 720 (Pika). However, you can upscale your results either directly in each tool (there are paid plans for it) or by using external AI tools like Topaz Labs.
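If you plan to cut these clips into a regular timeline, it helps to know how far the default sizes are from your delivery format. Here is a minimal Python sketch of that arithmetic. The two default resolutions are the ones quoted above; the Full HD target and the helper name `upscale_report` are my own assumptions for illustration and not part of either product.

```python
from fractions import Fraction

# Default output sizes quoted in this article (width, height in pixels).
DEFAULTS = {"Gen-2": (768, 448), "Pika": (1280, 720)}

# Assumed delivery format for this example: Full HD.
TARGET = (1920, 1080)


def upscale_report(size, target=TARGET):
    """Summarize how a source frame size relates to a target frame size."""
    w, h = size
    tw, th = target
    return {
        # Exact aspect ratio as a reduced fraction (e.g. 16/9).
        "aspect": Fraction(w, h),
        # Total pixel count of one frame.
        "pixels": w * h,
        # Smallest uniform scale factor that covers the whole target frame.
        "scale_to_fill": max(Fraction(tw, w), Fraction(th, h)),
        # True if no cropping or letterboxing is needed after scaling.
        "matches_target_aspect": Fraction(w, h) == Fraction(tw, th),
    }


for name, size in DEFAULTS.items():
    report = upscale_report(size)
    print(
        f"{name}: aspect {report['aspect']}, {report['pixels']} px, "
        f"scale x{float(report['scale_to_fill']):.2f}, "
        f"same aspect as target: {report['matches_target_aspect']}"
    )
```

Under these assumptions, the 768 × 448 frame is slightly narrower than 16:9, so filling a Full HD frame means a 2.5x upscale plus a small crop, while the 1280 × 720 frame only needs a clean 1.5x upscale.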

What about open-source projects?

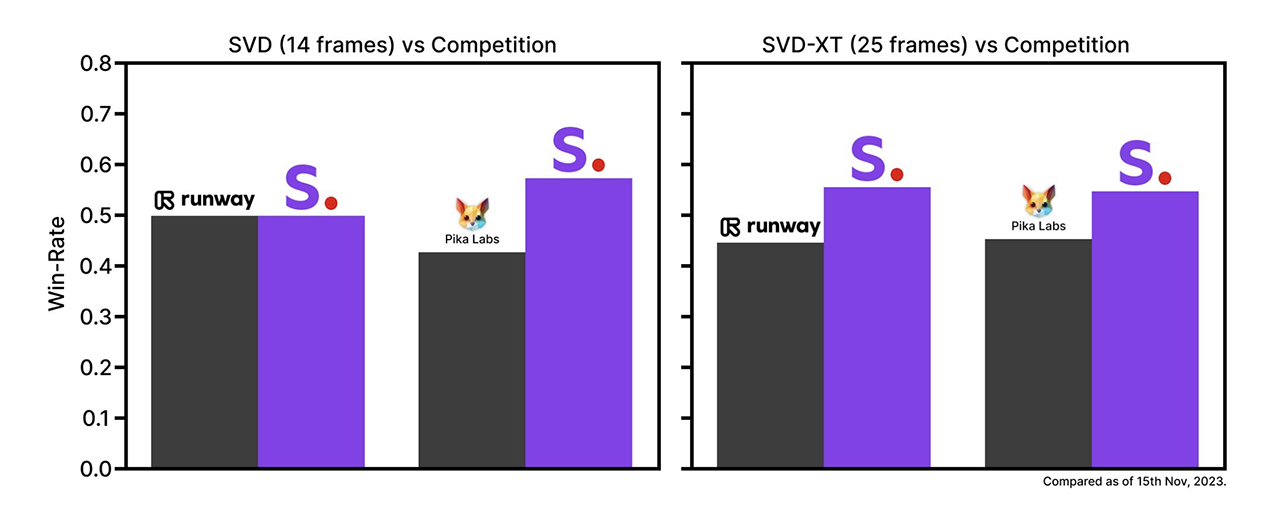

This past autumn, another big name in the image generation space entered the video terrain. Stability AI launched Stable Video Diffusion (SVD), their first model that can create videos out of still images. Like their other projects, it is open source, so you can download the code on GitHub, run the model locally, and read everything about its technical capabilities in the official research paper. If you want to take a look at it without struggling with AI code, here’s a free online community demo on their Hugging Face space.

For now, SVD consists of two image-to-video models that are capable of generating videos at 14 and 25 frames at customizable frame rates between 3 and 30 frames per second. As the creators claim, external user preference studies showed that Stable Video Diffusion surpasses the models from the competitors:

Well, we’ll see if that evaluation stands the test. At the moment, we can only compare it to the other image-to-video generative tools. Stability AI also plans to roll out a text-to-video model soon, and anyone can sign up for the waitlist here.
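To put the SVD numbers above into perspective: a fixed number of frames played back at a chosen frame rate gives you the clip length directly. The sketch below only does that division. The frame counts (14 and 25) and the frame rate range (3 to 30 fps) come from the description above, while the function name `clip_seconds` is a hypothetical helper of mine and not part of any Stability AI tool.

```python
# Frame counts of the two SVD image-to-video models, as described above.
FRAME_COUNTS = (14, 25)

# Lowest and highest customizable frame rate, as described above.
MIN_FPS, MAX_FPS = 3, 30


def clip_seconds(num_frames, fps):
    """Return the playback duration in seconds for a clip."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


for frames in FRAME_COUNTS:
    shortest = clip_seconds(frames, MAX_FPS)  # fastest playback
    longest = clip_seconds(frames, MIN_FPS)   # slowest playback
    print(f"{frames} frames: {shortest:.2f} s to {longest:.2f} s")
```

In other words, at cinema-like frame rates these clips last well under a second, and you only reach several seconds of footage by dropping to a very low, visibly stuttering frame rate.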

Generating videos from text – side-by-side comparison

So, let’s get the experiments going, shall we? Here’s my text prompt: “A woman stands by the window and looks at the evening snow falling outside”. The first result comes from Pika’s free beta model, created directly in their Discord channel:

Not so bad for an early research launch, right? The woman cannot be described as realistic, and for some reason, the snow falls everywhere, but I like the overall atmosphere and the lights outside. Let’s compare it to the newer model of Pika. The same text description with a different video result:

Okay, what happened here? This woman with her creepy plastic face terrifies me, to be honest. Also, where did the initial window go? Now, she just stands outside in the snow, and that’s definitely not the generation I asked for. Somehow, I like the previous result better, although it’s from the already obsolete model. We’ll give it another chance later, but now it’s Gen-2’s turn:

Although Gen-2 also didn’t manage to keep the falling snow solely outside the window, we can see how much more cinematic the output feels here. It’s the overall quality of the image, the light cast on the woman’s hair, the depth-of-field, the focus… Of course, this clip is far from spotless, and you would immediately recognize that it was generated by AI. But the difference is huge, and the models will continue learning for sure.

AI models are learning fast, but they also struggle

After running several tests, I can say that video generators struggle a lot. More often than not they produce sloppy results, especially if you want to get some lifelike motion within the frame. In the previous comparison, we established that Runway’s AI generates videos with higher-quality imagery. Well, maybe they just have a better still image generator because I couldn’t get a video of a running fox out of this bugger, no matter how many times I tried:

Surprisingly, Pika’s new AI model came up with a more decent result. Yes, I know the framing is horrible, and the fox looks as if it ran out of a cheap cartoon, but at least it moves its legs!

By the way, this is a good example to demonstrate how fast AI models learn. Compare the video above (by Pika 1.0) to the one below that I created with the help of the previous Pika model (in Discord). The text input was the same, but the difference in the generated content is drastic:

Animating images with AI video generators

A slightly better application idea for current video generators, in my opinion, is to let them create or animate landscape shots or abstract images. For instance, here is a picture of random-sized golden particles (sparks of light, magic, or dust – it doesn’t matter) on a black background that Midjourney V6 generated:

Each of the AI video generators mentioned in the first part of this review allows uploading a still image and animating it. Some don’t require any additional text input and go ahead on their own. For example, here’s what Runway’s Gen-2 came up with:

What do you think? It might function well as a background filler for credits text, but I find the motion lacks diversity. After playing around, I got a much better result with a special feature called “Motion Brush”. This tool, integrated as a beta test into the AI model, allows users to mark a particular area of their still image and define the exact motion.

Pika’s browser model insisted on an additional text description alongside the uploaded image, so the output didn’t come out as expected:

Regardless of the spontaneous explosions at the end, I don’t like the kind of motion or the camera shake. As I envisioned it, the golden particles should float around consistently. Let’s give it another go and try the community demo of Stable Video Diffusion:

Now we’re talking! Of course, this example runs at only 6 fps, and the AI model obviously cannot separate the particles from the background, but the overall motion is much closer to what I envisioned. Possibly, after extensive training followed by some more trial and error, SVD will deliver a satisfactory video result.

Consistency issues and other limitations

Well, after looking at these examples, it’s safe to say that AI video generators haven’t yet reached the point where they can take over our jobs as cinematographers or 2D/3D animators. The frame-to-frame consistency is not there, the results often have a lot of weird artifacts, and the motion of the characters (be it human or animal) does not feel even remotely realistic.

Also, at the moment, the general process requires way too much effort to get a decent generated video that’s close to your initial vision. It seems easier to take a camera and get the shot that you want “the ordinary way”.

At the same time, it is not like AI is going to invent its own ideas or carefully work on framing that is the best one for the story. Nor is that something non-filmmakers will be constantly aware of while generating videos. So, I reckon that applying visual storytelling tools and crafting beautiful evolving cinematography shall remain in our human hands.

There are also some other limitations that you should be aware of. For example, Stability AI doesn’t allow using the Stable Video Diffusion models for commercial purposes. You will face the same restriction with Runway and Pika on their free plans. Once you get a paid subscription, however, Pika will remove their watermark and grant commercial rights.

However, I advise against putting generated videos into ads and films for now. Why? Because there is a huge ethical question behind the use of this generative AI that needs regulatory and attribution solutions first. Nobody knows what data these models were trained on. Most likely, the training data consists of anything that can be found online, including a lot of pictures, photos, and other works by artists who have neither given their permission nor received any attribution. One of the companies trying to handle this issue differently is Adobe with their AI model Firefly. They also announced video AI tools last spring, but these are still in the making.

In what way can we use them to our advantage?

Some people say that AI-generated content will soon replace stock footage. I doubt it, to be honest, but we’ll see. In my opinion, the best way to use generative AI tools is during preproduction, for instance, to quickly communicate your vision. While text-to-image models are a handy go-to for gathering inspiration and creating artistic mood boards, video generators could become a quick solution for previsualization. If you, like me, normally string your own poor scribbles together one after the other to create a story reel, then, well, video generators will be a huge upgrade. They don’t produce perfect results, as we’ve seen above, but they are more than enough to draft your story and previs it in moving pictures.

Another idea that comes to mind is animating your still images for YouTube channels or presentations. Nowadays, creators tend to add digital zoom-ins or fake pans to make their photos appear more dynamic. With a little help from AI video generators, they will have more exciting options to choose from.

Conclusion

The creators of the text-to-image AI Midjourney also announced that they are working on a video generator and are planning to launch it in a few months. And there most certainly will be more to come this year. So, we can either look the other way and try to ignore it, or we can embrace this advancement and work together on finding ethical applications. Additionally, it’s crucial to educate people that there will soon be an increase in fake content, and they shouldn’t believe everything they see on the Internet.

What are your thoughts on AI video generators? Any ideas on how to make this technical development a useful tool for filmmaking (instead of only calling AI an enemy that will destroy our industry)? I know that this topic provokes heated discussions in the comments, so I ask you: please, be kind to each other. Let’s make it a constructive exchange of thoughts instead of a fight! Thank you!

Feature image: screenshots from videos, generated by Runway and SVD