During Google I/O 2024, the company's annual conference, which has just been broadcast, Google shared its latest news and launches. Among them is Google's Veo, which the company calls its "most capable generative video model to date." Judging by the published showcases, the new AI tool can indeed compete with OpenAI's Sora, which created massive buzz in the filmmaking world earlier this year. Let's take a look at what Google's Veo is capable of and at the company's plans for its release!

We've been following Google's attempts to generate videos with the help of AI for years. Remember their Imagen and Phenaki models? And what about Lumiere, which was introduced just this January? None of these neural networks was ever released to the public. However, as it turns out, the company's research has paid off. According to the announcement, Veo builds on these earlier experiments. The results demonstrated both during the event and in the official press release look impressive.

Key details about Google’s Veo

To hold its own in a highly competitive market, Google had to match the features its competitors already offer and go beyond them. Thus, Google's Veo is said to generate "high-quality, 1080p resolution videos that can go beyond a minute, in a wide range of cinematic and visual styles." According to Google, the developers made sure the model understands complicated text prompts, generates hyper-realistic imagery, and maintains frame-to-frame consistency. Here's one of the published examples, showing a lone cowboy on his horse riding across an open plain at sunset:

This video clip doesn't really shock us anymore, but only because we've already seen similar showcases from OpenAI's Sora. If Google's Veo had come out, say, at the beginning of the year, we would have been blown away by this tech. It's safe to say, though, that Sora now has a serious competitor, so AI developers are bound to keep pushing the boundaries further.

Control over the shots for filmmakers

Google's team highlights an unprecedented level of creative control as one of Veo's major strengths. The neural network can allegedly understand prompts for all kinds of cinematic effects. This means users will be able to include filmmaking terms such as "time-lapse", "aerial shot", and "panning shot" in their descriptions and, according to Google, get exactly the desired motion. Like here:

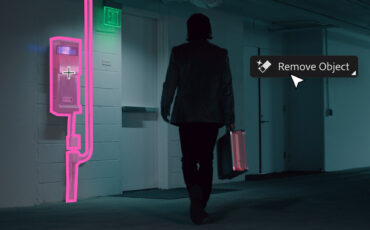

Editing videos with Google’s Veo

Another way to control your results is the editing feature that Google's Veo promises to include. In practice, it sounds similar to Adobe's Generative Fill, which was recently announced for video as well. This means you'll be able to upload an initial clip into Veo (original footage or a generated video, it doesn't matter) and alter some of its components. For instance, here is a drone shot of the Hawaiian jungle coastline, also created by Veo:

For the sake of the experiment, the developers gave the deep-learning model an editing command by appending it to the end of the prompt: "Drone shot along the Hawaiian jungle coastline, sunny day. Kayaks in the water."

According to Google, Veo also supports masked editing, so users can specify the area of the video they want to adjust. In addition, like other video generators, the new model accepts a still image as input and can animate it for you.

Sequencing video clips

What was really striking in the announcement of OpenAI's Sora was its ability to create sequences of clips that make up an entire scene. Of course, Google couldn't skip this feature either. To build such sequences, Veo can use a single prompt or a series of prompts defined by the user. The following example is the result of several commands that together tell a story. Namely:

- A fast-tracking shot through a bustling dystopian sprawl with bright neon signs, flying cars and mist, night, lens flare, and volumetric lighting.

- A fast-tracking shot through a futuristic dystopian sprawl with bright neon signs, starships in the sky, night, and volumetric lighting.

- A neon hologram of a car driving at top speed, speed of light, cinematic, incredible details, volumetric lighting.

- The cars leave the tunnel, back into the real-world city of Hong Kong.

As you can see, the video is not flawless. However, if it is indeed "unedited raw output from Veo", as the description states, then the level of consistency, language understanding, and control is honestly intimidating. What about you?

Google's take on Veo's ethics, and our disclaimers

Google's goal with Veo is to "help create tools that make video production accessible to everyone." We know from previous experience, though, that generative AI (especially for video) always sparks a huge debate around ethics. What if this tool is misused? How can we know what material the new model was trained on and whether the original artists were paid? (No information on that, by the way.) And above all, how the heck will we distinguish reality from AI's output if it is this convincing?

These questions keep coming up. Google says it wants to bring Veo to the world responsibly, so all generated videos will be watermarked using SynthID, the company's tool for watermarking and identifying AI-generated content. Google also plans to implement safety filters that help "mitigate privacy, copyright, and bias risks."

We also want to remind you that the videos in this article were carefully selected by Google's team for marketing purposes. So far, we have no evidence that all of Veo's output will have the same quality and consistency, and no information about its drawbacks and limitations. To research this tool in depth, we have to try it ourselves first. So, let's wait for the beta, shall we?

Availability

Google's Veo is not available to the public yet. However, the company wants to gather feedback from creators and filmmakers, and it already showcased one such collaboration during the keynote:

Over the coming weeks, Google will select more creators and give them beta access to Veo and some of its features through VideoFX, a new experimental tool at labs.google. You can join the waitlist now by signing up here. To see more showcases of the new text-to-video model, head over to Google's official announcement.

The discussion is open! What do you think about Google’s Veo announcement? Will it be able to compete with Sora? Would you like to try it out? What concerns do you have? Let’s talk in the comments below (but please, be kind to each other and open to different opinions, thank you!)

Image source: stills from videos generated by Google's Veo.