Runway, one of the leading developers of artificial intelligence tools, has announced the winners of its first annual AI film festival, which took place this winter. Of the hundreds of submissions, judges picked ten finalists and released their work to the public. The main goal of this competition was to celebrate “the art and artists making the impossible at the forefront of AI filmmaking.” Curious, we analyzed how different techniques were integrated into the winning films, from AI-generated art to whole 3D scene scans. Let’s take a look at the amazing new technology now available to any creator.

Films had to be between 1 and 10 minutes long, and one of the main festival criteria was, naturally, the use of neural networks in the work. There was no strict definition of which AI to use, or how to feature it in the film, so the variety of tools used in the winning videos is really impressive. The use of state-of-the-art technology counted for only 25% of a film’s score. Judges also took into account the quality of the overall film composition, originality, and of course, the artistic message.

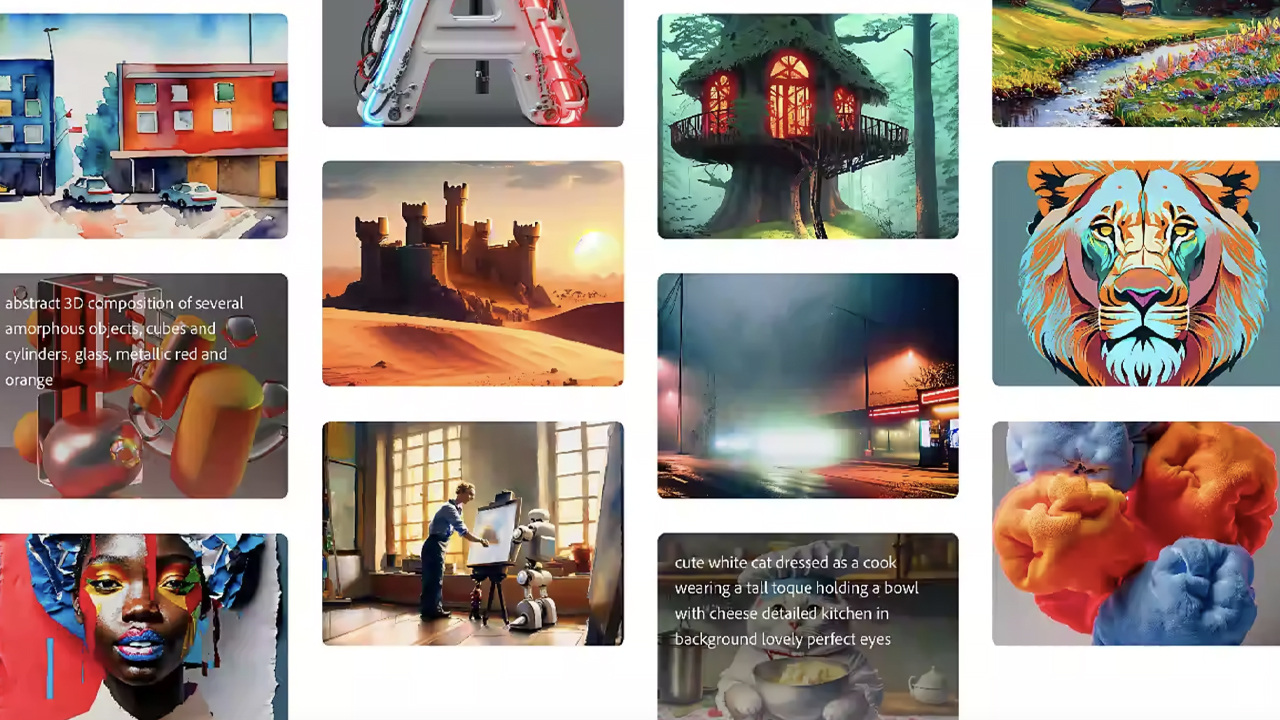

Using art generators as part of AI filmmaking

One of the shorts that impressed me most is “PLSTC” by Laen Sanches (we will embed it below). Basically, it’s a rapidly edited sequence of hundreds of pictures showing different ocean inhabitants wrapped in plastic and unable to escape. The director took strong images created by the AI art generator Midjourney (which we wrote about here), upscaled them with the help of the AI tool Topaz Labs, and put them together with slight animation. By carefully choosing matching visuals, he achieved a very distinct and coherent film atmosphere. Not a word is said, but the message is crystal clear, and it hurts. Dramatic classical music also helps evoke deep emotions, and the result is a small narrative wonder. It didn’t win any of the prizes, but it is definitely worth watching.

I like the video idea and the aesthetics of the final result. But it’s important to note: I don’t think we should go in this direction when creating films, at least for now. There is a lot of discussion about using AI-generated art in actual projects. You should be aware that the developers of tools like Midjourney or Stable Diffusion train their neural networks on all possible content, regardless of who has the rights to it. So, taking the output and claiming it as your own creation is neither ethical nor fair towards the original artists, who don’t receive attribution or compensation. In my opinion, it’s okay to use those AI tools for research, mood boards, or in general, during the idea development phase.

Nevertheless, there is hope. Last week, Adobe launched Firefly, which they designed to tackle exactly this problem. The AI art generator promises to use only legitimate and inclusive datasets. For example, developers trained Firefly’s deep-learning model on Adobe Stock images, openly licensed footage, and public domain content where the copyright has expired. But there is still room for improvement. Some filmmakers have urged Adobe to let Stock creators choose whether and how their footage is used, and, if it is used, to compensate them for it.

I believe it’s the next step, but the software is still very new. It’s not available for public use yet, but everyone is welcome to register for beta access by showing their interest here.

3D scans of real scenes – AI filmmaking from a different perspective

Another festival entry that caught my interest was the silver-prize winner “Given Again” by Jake Oleson. His film takes the spectator on a beautiful journey through everyday scenes, which look more like dreamy memories, frozen in time. It’s atmospheric, artistic, magical: everything but traditional storytelling. And what impresses me most is that all sequences were created entirely by using the NeRF technology of Luma AI. Watch it first, and then we will dive into the technical details:

Luma AI lets you create photorealistic, high-quality 3D models simply by using an iPhone (11 or newer). In their app, you easily capture an object, landscape, or the whole scene (like in the video example above) and then turn it into a detailed 3D environment. The most interesting thing about their scans is that they are based on so-called NeRF technology, which stands for “Neural Radiance Fields”. Put simply, it uses deep neural networks to learn the underlying 3D structure of an object by analyzing images or videos taken from different angles. Then the machine predicts the color and appearance of each point in 3D space and can synthesize a high-quality, extremely detailed model.
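To make the last step a bit more tangible, here is a minimal sketch of how a NeRF-style renderer turns per-point predictions into one pixel. It is an illustration only and not Luma AI's actual code: the function name `render_ray` and the hand-written sample values are assumptions, and in a real system the densities and colors would come from the trained neural network rather than from a list.

```python
import math


def render_ray(densities, colors, step):
    """Blend samples along one camera ray into a single pixel color.

    densities: non-negative opacity values, ordered from near to far
    colors:    one (r, g, b) tuple per sample, channels in [0, 1]
    step:      distance between neighbouring samples along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light that still reaches the camera
    for sigma, rgb in zip(densities, colors):
        # Opacity contributed by this sample over one step.
        alpha = 1.0 - math.exp(-sigma * step)
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        # Whatever this sample absorbed is hidden from samples behind it.
        transmittance *= 1.0 - alpha
    return tuple(pixel)


# Hypothetical ray: empty air, then a dense red surface, then something green.
densities = [0.0, 0.0, 1000.0, 5.0]
colors = [(0, 0, 1), (0, 0, 1), (1, 0, 0), (0, 1, 0)]
print(render_ray(densities, colors, step=0.1))
```

In this toy example the empty samples contribute nothing, and the dense red surface blocks the green sample behind it, so the pixel comes out red. Repeating this for every pixel of a virtual camera is what makes those freely moving shots possible.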

After it creates the 3D scan, you not only relive the moment but can also experiment with impossible camera moves in postproduction. “Given Again” is an exciting example of how this AI technology might enhance filmmaking, even on a tight budget.

AI-based effects in your video

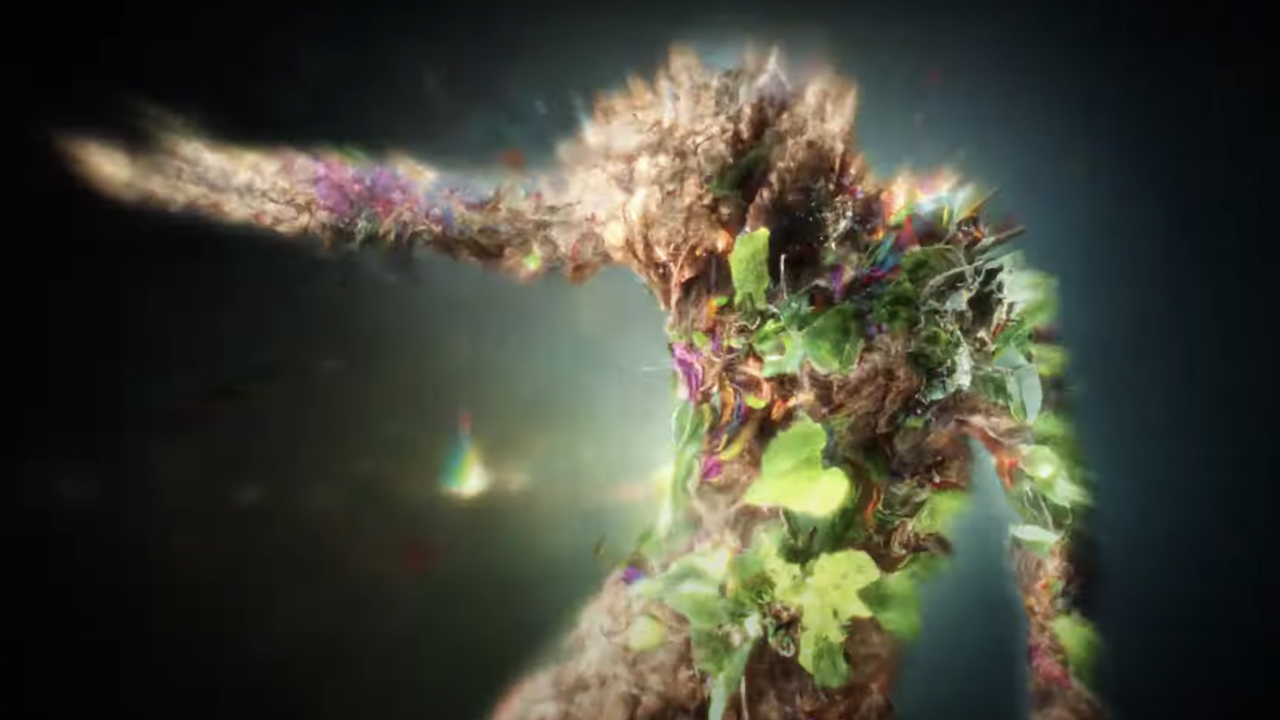

The grand prix of Runway’s AI film festival went to Riccardo Fusetti for his work titled “Generation”. This is a very expressive and abstract piece, which combines contemporary performance with massive visual effects and philosophical voice-over text.

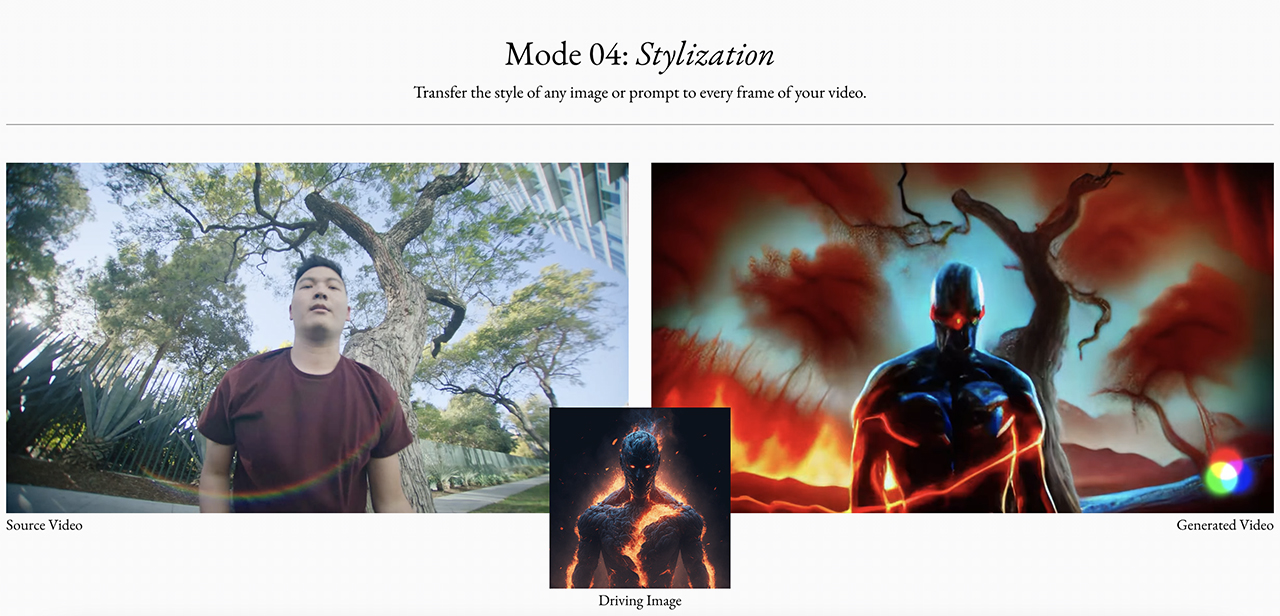

I’m not 100% sure which AI technology Riccardo used in this project, but it’s possible to get similar results with, for example, Runway’s Gen-1. Following quite a long beta-test period, it is finally available to the public. After registration, users can try out the technology for free with a limited number of credits.

The initial idea behind Gen-1 is to generate new videos out of existing ones using a reference image or a text prompt, so it’s possible to apply a specific style to your clip. You can turn simple forms (like shoe boxes) into complicated objects (like skyscrapers), or adjust only a masked object. VFX for everybody, with no one left behind, so to speak.

Sounds crazy enough, but it’s just the beginning. There are currently various research projects experimenting with creating whole movies from text using AI. We wrote about Google’s artificial intelligence development, for example. Runway has also recently announced their new product Gen-2, which should be able to make videos from scratch. A whole new world is waiting for us just around the corner: exciting and horrifying at the same time.

Democratizing filmmaking through AI tools

There are many other examples of AI filmmaking on the festival page. And if you are interested in the opinions of renowned artists on this subject, you can watch a panel discussion with participants like Darren Aronofsky.

The main discussion concerns the fact that artificial intelligence is a very young and rapidly evolving field, and we have to take the right approach to it. It’s important to use it ethically and view it as a creative assistant, not something to take over our jobs. At the same time, everybody agrees that AI helps to democratize filmmaking. New technology offers tools that were previously unavailable to independent creators or for low-budget productions.

I think it’s an important point, and the AI film festival confirms it. Among the finalists, you will see an official music video for A$AP Rocky’s song “Shittin’ Me” (which currently has 6.5 million views on YouTube) alongside a small, personal work by Sam Lawton, “Expanded Childhood”, where he creates illusory, AI-extended environments using his own archive photos.

The future of filmmaking

One of the finalists, the short explainer video “Checkpoint”, compares the general reaction to the implications of AI with how 1830s painters furiously protested against photography when it was invented. As time went on, they learned to appreciate modern tools and even embraced them as a new art form, while redefining the goal and meaning of painting (it didn’t have to be photorealistic anymore).

There are still tons of open questions, for sure, but the development of artificial intelligence will affect filmmaking. It already does. So, instead of protesting, we’d better find out how to use it as ethically and fairly as possible. If we can manage that, AI can augment our creativity and give us a steady base for a new jump forward. So, what do you think? Let’s discuss this in the comment section below!

Feature image: a film still from “Generation” by Riccardo Fusetti. Image credit: Runway.